Creating Usable 3D Models with Generative AI

We’ve built the first 3D generative AI that creates 3D models from text prompts that are directly usable in games and other 3D applications.

Source: Masterpiece Studio

To really understand what this means and the impact this will have on 3D creation - right now, as well as in the future - we’re going to need to toggle between two different modes of granularity: high and low levels.

At a high level, we’re reimagining what it means to be a 3D creator. We’re focused on building effortless experiences for anyone who wants to create 3D. That starts with rethinking how we should approach even creating 3D in the first place. Our modern approach rests on three key pillars: Generate, Edit, Share.

At a low level, this means we need to hide complexity while still letting creators control it in a simple way. It means imagining new product experiences designed to help more people easily express themselves with 3D models. To show how, a few (optional) sections further on walk you through what’s going on behind the scenes to make all this magic possible.

So why is this a big deal?

We believe that by empowering more people to create 3D objects, assets, and experiences, we’re expanding people’s capacity to create useful content and opening new economic opportunities for everyone.

That’s why our mission is to unlock 1 billion 3D creators.

Today’s generative 3D models are not usable in games and real-time environments without requiring an experienced 3D modeler for corrections.

The companies, teams, and researchers working in this space are doing absolutely incredible work - we’re looking forward to collaborating much more closely with all of them in the near future. However, today, they aren’t (yet!) producing the kind of game-ready 3D assets needed to run in games and production real-time environments.

At Masterpiece Studio, we’ve built the first generative AI to create game-ready 3D objects. This includes a platform with an entire toolset to help creators generate 3D content that is usable in games today; in the future, we’ll expand to training, education, social media, and commerce.

To enable creators to use 3D generative AI in games and other virtual environments today, our approach follows three stages. We’ll explain each of these and show how it works in our software.

Step 1 - Generate

Creates the content, often from a text prompt but also from other inputs (like photos, videos, or 2D graphics). Text prompts are imperfect, but they are a good starting point.

Source: Masterpiece Studio

Step 2 - Edit

Enables the refinement of the content. This corrects issues in the originally generated content and helps adjust the content to meet exact needs.

Source: Masterpiece Studio

Step 3 - Share

Makes the content interoperable so that it can be deployed in a game, virtual world, 3D platform, or other 3D project.

Source: Masterpiece Studio

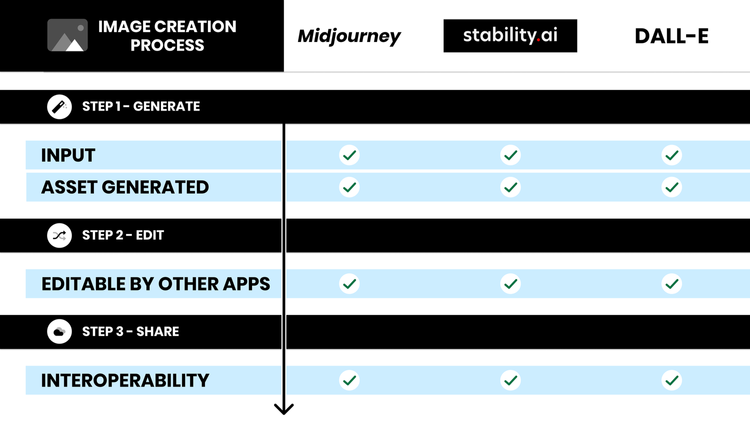

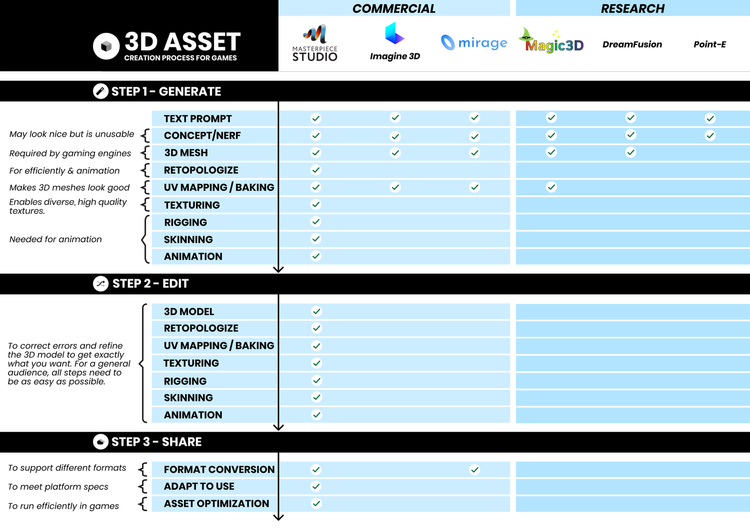

Comparing Different 3D Generative AI Projects

Below is a comparison of the most popular generative AI projects creating 3D models using text prompts:

Source: Masterpiece Studio

As a reminder, all of these companies are doing outstanding work and their projects will all have great value. I’d encourage people to check out the other projects too, since they will keep developing and more projects will enter the scene.

Read on as we break down some of the key elements here - and why this is a true game changer for creatives.

For the remainder of this post, we’ll be jumping back and forth between the high level (what’s so powerful about game-ready 3D models made with generative AI) and the low level (the elements that go into making this possible in a usable way).

If you would rather skip all the details and just check it out, you can join our waitlist, where we’ll excitedly welcome you into our community (and into the app as soon as we can)!

If you want to help us shape the future of 3D creation and you’re skilled in 3D algorithms and machine learning, feel free to send your resume directly to careers@masterpiecestudio.com.

Or if you’re interested in non-machine learning roles, you can check out our Careers page on our website for other opportunities.

There are 3 billion gamers who interact with 3D models and 1 billion gamers on platforms that directly support and encourage importing 3D models.

Gaming technology is driving a rapid expansion of 3D, which is becoming common across industries, including entertainment, education, training, social media, and commerce.

Most of the major tech companies are building 3D platforms, and thousands of smaller companies are building niche platforms as well.

Source: Masterpiece Studio

Within the next five years, most of the 5 billion internet users will be on one or more of these platforms, enabling them to use 3D models to better work, play, and communicate.

Sounds great, but there is a big problem! 99% of users on these platforms cannot create 3D models.

They can’t create because:

Creation tools are WAY too complex

Most people aren’t trained to be professional artists

Interoperability is a significant issue in 3D

This is extremely frustrating for users, but nothing captures this better than quotes from real people who have tried to learn:

“The process is so tedious and frustrating”

“I feel depressed because it’s very overwhelming”

But what if these three problems were solved and 3D creation became effortless? How about we help more people generate, edit, and deploy 3D assets tailored to their needs?

Photos and videos have rapidly become part of our everyday lives over the last 20 years. Thanks to support from most platforms, new technologies such as smartphones, and easy-to-use software to create them (including generative AI), this form of media is everywhere!

The same pattern is already repeating itself for 3D assets, thanks in part to gaming, as we’ve mentioned.

“After text and audio, graphics is the final frontier.”

So how do we unlock 3D creation for the masses?

We need to reimagine what creating 3D will mean for today’s and tomorrow’s creators, with all kinds of new tools and techniques (some yet to emerge) that make 3D creation easy and widely accessible.

However, before we dive deeper into the 3D creation process, let’s outline a few concepts first and show how generative AI works for 2D images:

Source: Masterpiece Studio

Step 1 - The image is generated.

Step 2 - The generated image can be edited with inpainting and outpainting, and then it can be touched up in photo editing software.

Step 3 - Sharing is mostly free, since JPEGs and PNGs are universally compatible.

Note: as we’ll see later, this isn’t free for all media types.

Now let’s compare this to 3D objects. These are the models needed for games, experiences, training, AR filters on social media, those nice images you see that have perspective, and countless other uses:

Source: Masterpiece Studio

Without getting into the weeds, here are some important points:

While image and 3D generation share the same core phases, the process is clearly much more complex for 3D models.

Generating images is a much simpler problem to solve, and the ecosystem of resources needed to tackle this problem is much more mature and readily available.

Generative 3D AI is less mature, but holds great promise for revolutionizing 3D content creation.

For generative 3D media to become really valuable, it needs to be editable & remixable (more on this in future posts!). At Masterpiece Studio, we already have these tools and infrastructure - we’ll be able to automatically add more depth to images and more utility to any generated 3D models.

The big takeaway is that at Masterpiece Studio, we’ve developed the first game-ready, generative 3D AI and this is a game changer for any 3D creative!

If you’re interested in going further into why this is such a game changer, the next section dives in deep and explains it.

For most people, the above might be enough info. However, if you’re a creator who likes to know the why, if you’re a startup founder who’s trying to build useful products, or if you’re an investor looking to invest in this space, then keep reading.

Let’s break down the key phases and what's happening at each step.

STEP 1 - GENERATE

A textured, animated, game-ready 3D model can’t just be 3D and look nice (as is the case with NeRFs). To run in a game or virtual environment, it needs to be a 3D mesh with good topology that is UV mapped, texture painted, rigged, skinned, and given keyframe animation.
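The requirements above work like a checklist: an asset is only game-ready once every stage is done. As a minimal sketch (the stage names and the `Asset` class are hypothetical, not part of any real file format or engine API), that check could look like this:

```python
from dataclasses import dataclass, field

# Hypothetical stage names mirroring the requirements listed above,
# in the order the pipeline performs them.
REQUIRED_STAGES = (
    "mesh",
    "retopology",
    "uv_mapping",
    "texturing",
    "rigging",
    "skinning",
    "animation",
)


@dataclass
class Asset:
    name: str
    completed: set[str] = field(default_factory=set)  # stages already done


def missing_stages(asset: Asset) -> list[str]:
    """Return the required stages the asset still lacks, in pipeline order."""
    return [stage for stage in REQUIRED_STAGES if stage not in asset.completed]


def is_game_ready(asset: Asset) -> bool:
    """An asset is game-ready only when no required stage is missing."""
    return not missing_stages(asset)
```

For example, `missing_stages(Asset("chair_nerf"))` returns every stage, which is the point made about NeRFs: they can look nice while having none of the structure a game needs.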

Source: Masterpiece Studio

Concept: With only the first two elements (Input and Concept / NeRF / Point Cloud), you’re left with a good-looking 3D object in the best case, or a poor-looking one in the worst case. Either way, it isn’t remotely usable in, or even compatible with, any game or standard 3D platform.

3D Model: If we want to use the 3D model in a game (or really anywhere), at an absolute minimum it needs to be a 3D mesh (which is fancy terminology for a 3D object that’s made up of a bunch of polygons).
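To make "a bunch of polygons" concrete, here is a minimal sketch of an indexed triangle mesh, a common in-memory layout in which vertex positions are stored once and each triangle refers to its three corners by index. The `Mesh` class and `unit_quad` helper are illustrative names, not taken from any particular engine:

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Mesh:
    """Indexed triangle mesh: shared vertex positions plus triangles
    that refer to those vertices by index."""
    vertices: list[Vec3]
    faces: list[tuple[int, int, int]]

    def triangle_count(self) -> int:
        return len(self.faces)


def unit_quad() -> Mesh:
    # A flat square built from two triangles that share vertices 0 and 2.
    return Mesh(
        vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
        faces=[(0, 1, 2), (0, 2, 3)],
    )
```

Sharing vertices between triangles keeps the data compact and means that moving one vertex moves every triangle attached to it, which matters later for skinning and animation.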

Retopologize: Games run on all kinds of hardware and have strict performance requirements, so just having any type of 3D mesh isn’t enough. To run with good performance, the object needs retopology (a fancy word for changing how the polygons are arranged and how many there are) to make it efficient. Reducing the number of polygons makes it more efficient in the game, and arranging them well is needed for good animation (we’ll cover that part later).
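The "fewer polygons" half of this step can be illustrated with vertex clustering, a classic and very simple decimation technique swapped in here purely for illustration; it is not a claim about how Masterpiece Studio retopologizes. It snaps vertices into a grid, merges each occupied cell into one averaged vertex, and drops triangles that collapse. It does nothing for the "arranged well" half (clean edge flow for animation), which real retopology also has to handle:

```python
import math
from collections import defaultdict

Vec3 = tuple[float, float, float]
Face = tuple[int, int, int]


def cluster_decimate(vertices: list[Vec3], faces: list[Face], cell_size: float):
    """Reduce polygon count by merging all vertices that share a grid cell."""
    cell_of = []                 # grid cell of each original vertex
    members = defaultdict(list)  # grid cell -> original vertex indices
    for i, (x, y, z) in enumerate(vertices):
        cell = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        cell_of.append(cell)
        members[cell].append(i)

    # One new vertex per occupied cell, at the average position of its members.
    new_index = {}
    new_vertices = []
    for cell, idxs in members.items():
        new_index[cell] = len(new_vertices)
        n = len(idxs)
        new_vertices.append(tuple(sum(vertices[i][k] for i in idxs) / n for k in range(3)))

    # Remap triangles; skip ones whose corners merged together or that repeat.
    new_faces = []
    seen = set()
    for a, b, c in faces:
        fa, fb, fc = (new_index[cell_of[v]] for v in (a, b, c))
        if len({fa, fb, fc}) < 3:
            continue
        key = frozenset((fa, fb, fc))
        if key in seen:
            continue
        seen.add(key)
        new_faces.append((fa, fb, fc))
    return new_vertices, new_faces
```

A larger `cell_size` merges more vertices and removes more triangles, trading detail for speed.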

UV Mapping & Texture Baking: Now that you have a good mesh, it’s time to make that mesh look good! Putting the colors directly on the mesh (vertex painting) looks terrible for game-ready objects. To make it look good, you wrap a texture image around it, and the mapping that tells each polygon which part of that image to use is called a UV map. To understand this, think about a globe of the world and imagine you wanted to flatten the sphere so that it can lie flat like a regular map. To do this, you would cut it up into pieces and squish them flat. Bingo! You’ve made a UV map. Next, you take the (hopefully) nice-looking NeRF or 3D model that you started with and copy its appearance onto the UV-mapped texture (this is called texture baking). Now you have a good-looking 3D model.
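The globe analogy can be made literal. The sketch below, assuming a unit sphere as the mesh, maps surface points to (u, v) texture coordinates the way a world map unrolls latitude and longitude, then "bakes" by visiting every texel, finding its surface point, and copying the source color there. `globe_color` is a hypothetical stand-in for the source model; baking from a NeRF would query the radiance field instead, and real UV unwrapping cuts arbitrary meshes into many flattened pieces rather than using one sphere projection:

```python
import math

Vec3 = tuple[float, float, float]
Color = tuple[int, int, int]


def sphere_uv(p: Vec3) -> tuple[float, float]:
    """Map a point on the unit sphere to (u, v) in [0, 1]: u follows longitude, v latitude."""
    x, y, z = p
    u = 0.5 + math.atan2(z, x) / (2 * math.pi)
    v = 0.5 + math.asin(max(-1.0, min(1.0, y))) / math.pi
    return u, v


def uv_to_sphere(u: float, v: float) -> Vec3:
    """Inverse of sphere_uv: the surface point a given texel corresponds to."""
    lon = (u - 0.5) * 2 * math.pi
    lat = (v - 0.5) * math.pi
    return (math.cos(lat) * math.cos(lon), math.sin(lat), math.cos(lat) * math.sin(lon))


def bake(source_color, width: int, height: int) -> list[list[Color]]:
    """Texture baking: fill each texel with the source model's color at that surface point."""
    texture = []
    for row in range(height):
        v = (row + 0.5) / height  # sample at texel centers
        texture.append([source_color(uv_to_sphere((col + 0.5) / width, v))
                        for col in range(width)])
    return texture


# Hypothetical source model: green above the equator, blue below it.
def globe_color(p: Vec3) -> Color:
    return (0, 160, 0) if p[1] > 0 else (0, 0, 200)
```

`bake(globe_color, 4, 2)` produces a tiny 4x2 texture whose first row is blue and second row is green.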

Texturing: The process of painting the texture onto the 3D model. This is where the detail of how it looks comes from, and it is key to making the model look high quality. Also, since generating textures is much faster than generating the underlying 3D model, it lets you iterate much more quickly.

Rigging: A rig is a skeleton that you put into the 3D model. It gives the 3D model structure during animation. For the generate phase, this needs to be automatic so creators don’t have to worry about it.
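A skeleton is a hierarchy of bones where each child inherits its parent's motion. The minimal sketch below shows that structure using 2D forward kinematics for brevity (real rigs use full 3D transforms, usually matrices or quaternions); the `Bone` class and the bone names are hypothetical:

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bone:
    name: str
    parent: Optional[int]  # index of the parent bone; None for the root
    length: float          # distance from this bone's joint to its tip
    angle: float           # rotation in radians, relative to the parent bone


def bone_tips(bones: list[Bone]) -> dict[str, tuple[float, float]]:
    """Forward kinematics in 2D: compute where each bone's tip ends up.

    Bones must be listed parents-first so a parent is placed before its children.
    """
    world_angles: list[float] = []
    tips: list[tuple[float, float]] = []
    for bone in bones:
        if bone.parent is None:
            base, parent_angle = (0.0, 0.0), 0.0
        else:
            # A child starts at its parent's tip and inherits the parent's rotation.
            base, parent_angle = tips[bone.parent], world_angles[bone.parent]
        angle = parent_angle + bone.angle
        tips.append((base[0] + bone.length * math.cos(angle),
                     base[1] + bone.length * math.sin(angle)))
        world_angles.append(angle)
    return {bone.name: tip for bone, tip in zip(bones, tips)}


arm = [
    Bone("upper_arm", parent=None, length=1.0, angle=0.0),
    Bone("forearm", parent=0, length=1.0, angle=math.pi / 2),  # elbow bent 90 degrees
]
```

With this arm, the forearm tip sits at roughly (1, 1); rotating only `upper_arm` also carries the forearm along, which is the structure a rig gives the model during animation.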

Skinning: When people, animals, or monsters move, their skin tends to move and stretch, especially around the joints, so skinning is needed for quality animation. Skinning is the process of attaching the rig (aka skeleton) to the mesh (aka the skin). Once again, we want this process to be automatic so that creators don’t need to worry about it.
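A widely used way to attach a mesh to a rig is linear blend skinning: each vertex stores weights for the bones that influence it, and its posed position is the weighted average of where each of those bones would carry it. The sketch below is 2D for brevity, and each transform is assumed to already express the bone's motion relative to its rest (bind) pose; it illustrates the general technique, not Masterpiece Studio's implementation:

```python
import math

Point = tuple[float, float]


def make_transform(angle: float, tx: float, ty: float):
    """A 2D bone transform: rotate by `angle` radians, then translate by (tx, ty)."""
    c, s = math.cos(angle), math.sin(angle)

    def apply(p: Point) -> Point:
        return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

    return apply


def skin_vertex(rest: Point, influences) -> Point:
    """Linear blend skinning: blend the vertex positions produced by each
    (transform, weight) pair. Weights are expected to sum to 1."""
    x = y = 0.0
    for transform, weight in influences:
        px, py = transform(rest)
        x += weight * px
        y += weight * py
    return (x, y)
```

A vertex near a joint, weighted half to a still bone and half to a bone that moved two units, ends up moving one unit; that blending is what produces the smooth stretch around joints.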

Animation: To make the 3D model move naturally, we want to generate animations. To make them usable, the animations are adapted to the rig being used: the animations move the bones, and the skinning information moves the mesh. What do you get out of it? A nice animation.
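Keyframe animation stores a bone's rotation only at a few key times and fills in the frames between them. As a minimal sketch, assuming per-bone angles in degrees and plain linear interpolation (engines typically interpolate 3D rotations with quaternion slerp), with a hypothetical `wave` clip keyed by bone name:

```python
from bisect import bisect_right

Keyframes = list[tuple[float, float]]  # sorted (time in seconds, angle in degrees)


def sample(keys: Keyframes, t: float) -> float:
    """Linearly interpolate between keyframes, clamping outside the keyed range."""
    times = [time for time, _ in keys]
    if t <= times[0]:
        return keys[0][1]
    if t >= times[-1]:
        return keys[-1][1]
    i = bisect_right(times, t)  # index of the first keyframe strictly after t
    (t0, a0), (t1, a1) = keys[i - 1], keys[i]
    alpha = (t - t0) / (t1 - t0)
    return a0 + alpha * (a1 - a0)


def pose_at(clip: dict[str, Keyframes], t: float) -> dict[str, float]:
    """Evaluate every animated bone of a clip at time t."""
    return {bone: sample(keys, t) for bone, keys in clip.items()}


# Hypothetical "wave" clip: the forearm swings up and back down over two seconds.
wave = {
    "upper_arm": [(0.0, 0.0), (2.0, 0.0)],
    "forearm": [(0.0, 0.0), (1.0, 90.0), (2.0, 0.0)],
}
```

Because the clip is keyed by bone name, it only works on a rig that has bones with those names; this is why animations have to be adapted to the rig being used.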

By this part of the process you’ve now actually made a real, game-ready 3D model using generative AI!

As we’ve hinted at throughout this post, generating 3D assets using AI is incredible - but it’s only one part of the equation.

Generative AI can get you close to what you want, but like anything that’s been generated with current AI tools, you’ll need to modify it in order to remove any defects and to really personalize it for your own needs.

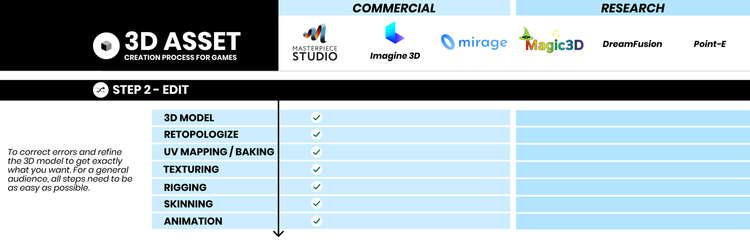

STEP 2 - EDIT

This is where the Edit phase comes in, and it is even more important for 3D generative AI than for 2D generative AI. Because there are fewer 3D models to train on than there are texts and images, and because generation involves more steps, a good editing process matters even more.

Skipping this step will make even good-looking models largely useless in real-world situations. Editing in other apps isn't an option either, since all other full-pipeline 3D modeling apps are extremely complicated and take many years to learn, let alone master.

To make any of this more accessible to everyday creators, it is critical that the software guides them through these steps and that everything is made extremely fast, easy and, most importantly, fun!

With Masterpiece Studio, you’ll soon have all the tools you need (assisted by in-app AI to breeze through any hard parts) to gain more precision and control over the 3D models generated with our tools.

We’re also working hard to ensure that we’re able to support any generated model from any other tool, allowing you to import them into our software in order to refine and optimize the model as if it was made with our tools!

Source: Masterpiece Studio

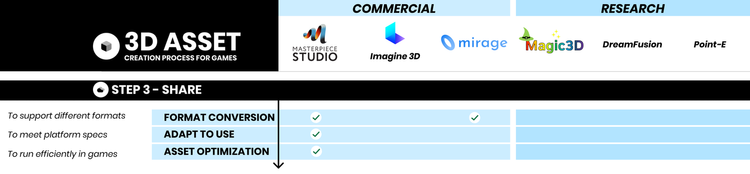

STEP 3 - SHARE

The third and final phase, Share, covers all the steps required for interoperability.

Instead of creating 3D assets for one-off use cases, if we’re able to generate, edit and automatically optimize or convert the assets - on-demand - then each 3D asset becomes even more valuable!

There are over 1,000 companies in the Metaverse Standards Forum that focus on or use some form of 3D assets, and 3D model interoperability was voted the single biggest problem plaguing the 3D industry!

This isn’t just a simple matter of supporting and converting formats; the 3D model often needs deep changes to meet the individual requirements of each game or platform.

Source: Masterpiece Studio

Format Conversion: This is needed to support the file format for the game or platform.
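As a small example of format conversion, the sketch below writes an indexed triangle mesh as Wavefront OBJ, a simple text format that most 3D tools can read: `v` lines hold vertex positions and `f` lines list each triangle's vertex indices, which OBJ counts from 1 rather than 0. OBJ stores geometry but not rigs or skeletal animation, which is one reason game pipelines often rely on richer formats such as glTF or FBX; the `to_obj` function name is our own:

```python
def to_obj(vertices: list[tuple[float, float, float]],
           faces: list[tuple[int, int, int]]) -> str:
    """Serialize an indexed triangle mesh as Wavefront OBJ text."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    # OBJ face indices are 1-based, so shift each 0-based index by one.
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"
```

Writing a single triangle produces three `v` lines followed by `f 1 2 3`.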

Adapt to Use: This is where we make deep structural changes to the model’s representation to support the computer graphics specs of the game. For example, perhaps the game only supports vertex colors or needs a different texture size, or perhaps it requires a different reference frame or a different bone naming structure.
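Two of the adaptations mentioned above are easy to sketch. Some tools treat +Z as "up" while many engines use +Y, so positions need a rotation of -90 degrees about the X axis; and a platform may expect specific bone names. The naming map below is hypothetical, invented for illustration rather than taken from any real platform's convention:

```python
Vec3 = tuple[float, float, float]


def z_up_to_y_up(p: Vec3) -> Vec3:
    """Rotate -90 degrees about the X axis so that +Z (up) becomes +Y (up)."""
    x, y, z = p
    return (x, z, -y)


# Hypothetical mapping from a source rig's bone names to a target platform's names.
BONE_NAME_MAP = {"spine": "Spine", "upper_arm.L": "LeftArm", "forearm.L": "LeftForeArm"}


def rename_bones(names: list[str], mapping: dict[str, str]) -> list[str]:
    """Rename bones for the target platform; unmapped bones keep their name."""
    return [mapping.get(name, name) for name in names]
```

In practice the same rotation has to be applied consistently to vertex positions, normals, bone transforms, and animation data, or the model and its animations will disagree.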

Asset Optimization: Games run on all kinds of different hardware, so each model needs deep optimization of its polygon count and many other characteristics to run efficiently on every device: web, desktop, tablets, VR/AR, phones, and consoles.

We never want the creator to have to worry about many of these details, so we focus on doing them automatically based on where the user wants to use the object.
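Doing this automatically means deriving optimization settings from the target device. As a minimal sketch, assuming per-target budgets for triangle count and texture size (the numbers below are illustrative placeholders, not recommendations from Masterpiece Studio or any engine), the plan could be computed like this:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetBudget:
    max_triangles: int
    max_texture_size: int  # pixels per side


# Illustrative placeholder budgets; real limits vary by project, engine, and device.
BUDGETS = {
    "phone": TargetBudget(10_000, 1024),
    "vr": TargetBudget(50_000, 2048),
    "desktop": TargetBudget(200_000, 4096),
}


def plan_optimization(target: str, triangles: int, texture_size: int) -> dict:
    """Decide how much to decimate and how far to shrink the texture for a target."""
    budget = BUDGETS[target]
    # Fraction of triangles to keep (never more than all of them).
    keep_ratio = min(1.0, budget.max_triangles / triangles)
    tex = texture_size
    while tex > budget.max_texture_size:
        tex //= 2  # halve the resolution until it fits the budget
    return {"keep_ratio": keep_ratio, "texture_size": tex}
```

The resulting `keep_ratio` could then drive a decimation step, so the creator only picks where the object will be used.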

Putting all these stages together with Masterpiece Studio, 3D creators will soon be able to:

Use AI to generate a structured, game-ready 3D model.

Edit any aspect of the 3D model - mesh, look (texture), and movement (animation).

Use it over and over again by automatically converting or optimizing it on-demand for any needs they might face!

Generative AI is a game changer for 3D game creators, and very soon, for anyone who wants to create 3D!

At the end of the day, it’s about replacing complex or traditional workflows with fun, effortless ways to make 3D assets that exceed today’s needs and expectations.

The more people we can empower to make the 3D models they want in less time, the greater the opportunity we’ll unlock for everyone!

So come join our community, join our waitlist, and see for yourself just how much fun exploring the future of 3D creation together can be! If you want to help us shape the future of 3D creation and you’re skilled in 3D algorithms and machine learning, feel free to send your resume directly to careers@masterpiecestudio.com.

Or if you’re interested in non-machine learning roles, you can check out our Careers page on our website for other opportunities.